Anthropic's Claude has emerged as a leading ethics-focused AI platform, challenging industry giants like ChatGPT and Google Gemini with its "Constitutional AI" framework. Founded in 2021 by former OpenAI executives Dario and Daniela Amodei, Anthropic says it prioritizes societal benefit over profit, and its safety-first approach has made Claude a preferred choice for software developers and professionals handling complex technical tasks. With a 200,000-token context window and a reputation for honesty and reduced hallucinations, Claude represents both the promise and the peril of AI: it offers powerful capabilities while raising critical questions about humanity's role in an increasingly automated future.

The Genesis of a Different Kind of AI

Anthropic was founded in 2021 by former OpenAI executives Dario and Daniela Amodei, who left OpenAI over concerns about the rapid commercialization and the safety of powerful AI systems. Their vision was to create a Public Benefit Corporation that prioritized societal benefit over pure profit, a commitment reflected in the company's "Constitutional AI" framework.

In 2026, Anthropic significantly updated this "constitution," shifting from a rigid checklist of rules to a more holistic, values-based approach. This internal set of principles guides the model's behavior and establishes a clear hierarchy of priorities, listed here from highest to lowest:

- Broad Safety: Ensuring the AI does not undermine human oversight or assist in catastrophic actions.

- Ethics: Acting as a "good person" would—prioritizing honesty and wisdom.

- Policy Compliance: Following Anthropic's specific operational guidelines.

- Helpfulness: Finally, aiming to be as useful as possible to the end-user.

Real-World Performance: Where Claude Excels

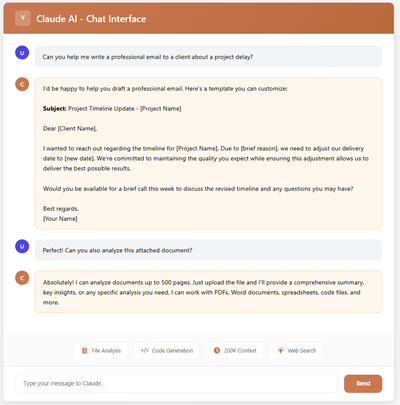

For professionals and creators alike, Claude has become a go-to tool for high-complexity tasks. It is particularly known for its 200,000-token context window, which allows it to process and analyze hundreds of pages of technical documentation or a large codebase in a single session.
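To put the "hundreds of pages" figure in perspective, here is a rough back-of-the-envelope estimate. The two ratios are common rules of thumb for English prose (about 0.75 words per token and about 500 words per page) and are assumptions for illustration, not figures published by Anthropic. Actual token counts vary with language, formatting, and the tokenizer in use.

```python
# Rule-of-thumb ratios for English prose. These are assumptions for
# illustration, not official Anthropic figures.
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 500


def estimate_pages(context_tokens: int) -> int:
    """Estimate how many pages of plain prose fit in a context window."""
    words = context_tokens * WORDS_PER_TOKEN
    return round(words / WORDS_PER_PAGE)


print(estimate_pages(200_000))  # 300
```

Under these assumptions, a 200,000-token window holds roughly 300 pages of plain text. Source code and dense technical material usually consume more tokens per page, so the real figure for a codebase would likely be lower.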

Coding Mastery

Claude is frequently rated a top choice among software developers, who cite its ability to write clean, idiomatic code and to debug subtle logic errors that other models miss.

Nuance and Tone

Unlike the often clinical tone of its competitors, Claude is praised for its ability to follow complex instructions with a more natural, human-like writing style.

Reduced Hallucinations

Because it is trained to be "honest," Claude is more likely to admit when it does not know an answer than to confidently provide false information.

Feature Comparison

| Feature | Claude 4.5 | ChatGPT o3 | Google Gemini 3.0 |
|---|---|---|---|
| Best For | Coding & Long Docs | Creative & General | Google Ecosystem |
| Context Window | 200,000 Tokens | 128,000 Tokens | Up to 2,000,000 Tokens |
| Safety Approach | Constitutional AI | Human Feedback (RLHF) | Integrated Filters |
| Free Tier | Available | Available | Most Generous |

Accessibility and Pricing

Claude is highly accessible, offering a dedicated Windows application (installed via a modern MSIX package) that lets it live directly on a user's desktop. The interface is designed to fit into daily workflows, providing a clean and intuitive experience for users at all skill levels.

Anthropic offers several pricing tiers:

- Free: Limited access to the latest models.

- Pro ($20/month): Provides 5x more usage than the free tier and early access to new features.

- Team/Enterprise ($25–$100+): Designed for businesses requiring higher security and collaborative features.

The Philosophical Dilemma: A Threat to Humanity?

While the technical achievements of Claude are remarkable, we cannot ignore the alarm that artificial intelligence is causing across global economies. In 2026, leaders at the World Economic Forum warned that job displacement from AI could outpace society's ability to adapt, with industries from finance to customer service already shifting toward automation.

The power AI holds is significant, and the fear that we might eventually "give up on humanity" as that power grows is a valid concern. If we allow AI to manage every facet of life, whether in robot form or through invisible algorithms, without maintaining strict human involvement, we risk losing the very creativity and empathy that define us.

Conclusion: Keeping Humanity in the Loop

The consensus among governance experts in 2026 is that "Human-in-the-Loop" (HITL) oversight is no longer optional; it is a necessity for survival. We must ensure that big tech companies do not operate in a vacuum, but rather within a framework of public-private partnerships that prioritize human agency.

Claude AI, with its safety-first constitution, represents a step toward this more responsible future. However, the final decision-making power must remain with us. As we integrate these "brilliant friends" into our daily lives, we must remain vigilant stewards of our own destiny, ensuring that artificial intelligence serves as a collaborator that elevates our potential rather than a replacement that diminishes our humanity.